Design Deep Dive

Problem 2: Data Tsunami

How I designed a system that helps engineers find a single "needle" of insight in a 100-terabyte haystack.

The context

As connected systems mature, they begin to generate data at a scale that outpaces human understanding. Modern vehicles are a clear example. Advances in sensing added cameras, radar, LiDAR, and thermal sensors alongside high-frequency operational data, and each vehicle began producing an overwhelming stream of telemetry.

What initially felt like progress quickly became a liability. Customers were ingesting millions of data points per minute, far beyond what teams could realistically interpret, store, or act on.

The problem was not access to data. It was the absence of intent in how data was collected.

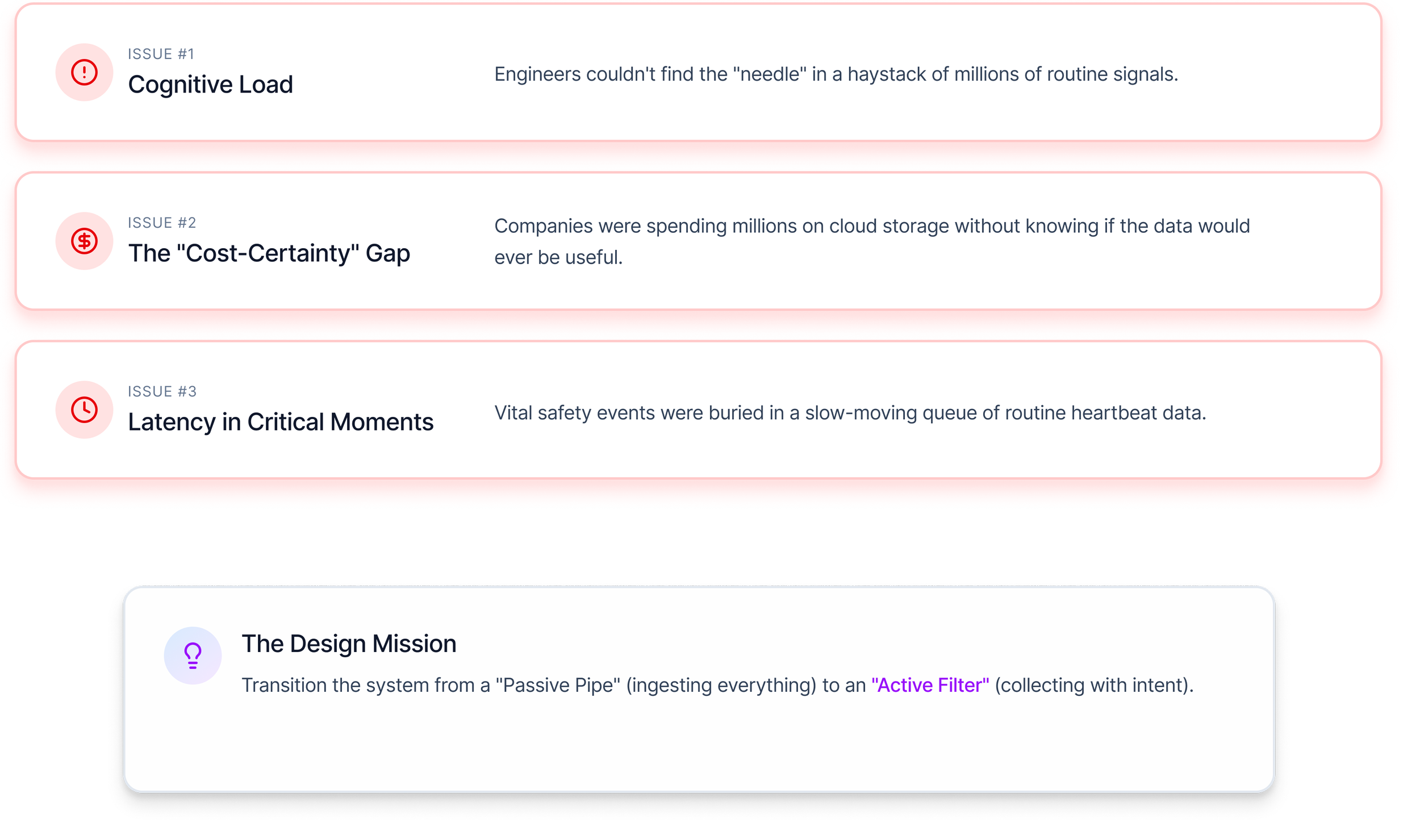

The problem

As vehicles evolved into "computers on wheels" packed with LiDAR, radar, and thermal sensors, the volume of telemetry skyrocketed. Customers were ingesting millions of data points per minute: a literal "Data Tsunami."

Investigating the problem

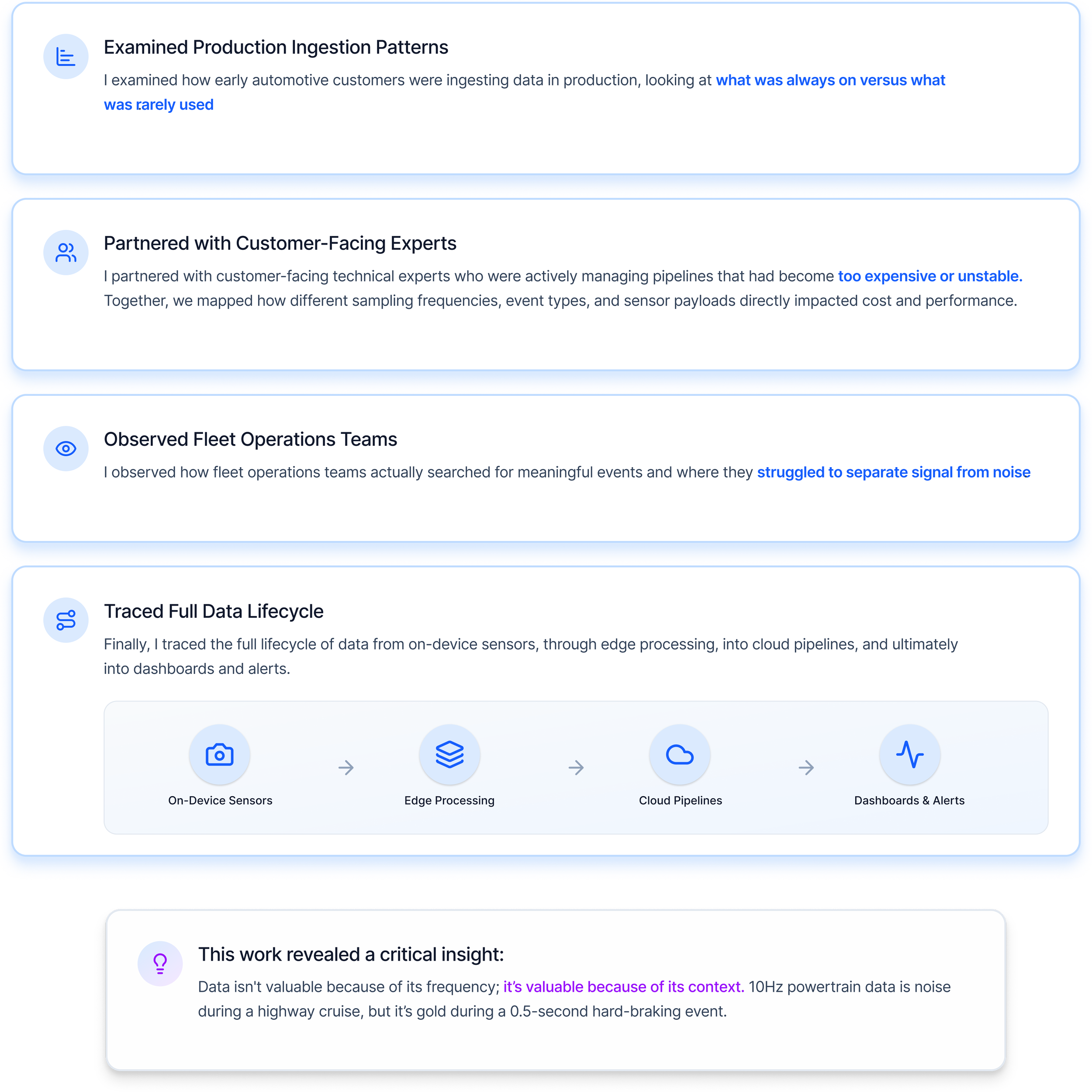

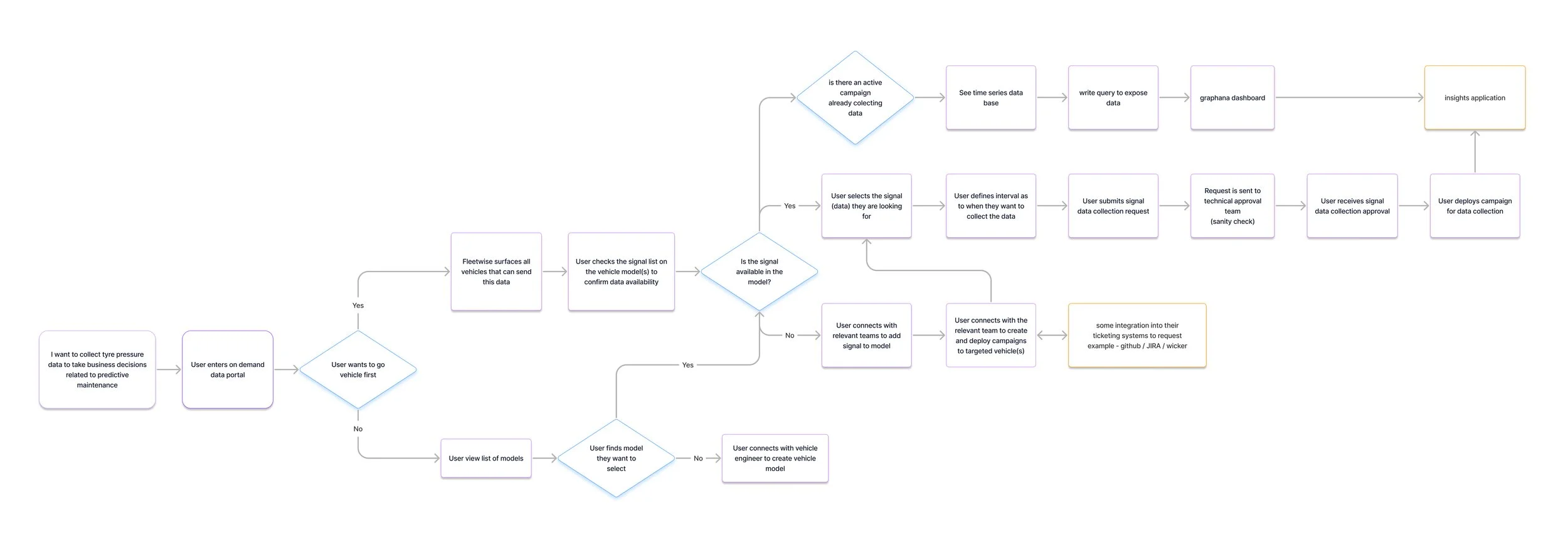

To understand the issue at both a technical and an operational level, I stepped outside design artifacts and into real system behavior. I interviewed fleet managers and data scientists to learn how they actually worked, and I mapped the end-to-end data journey.

Key insights

Three insights reshaped how we approached the problem:

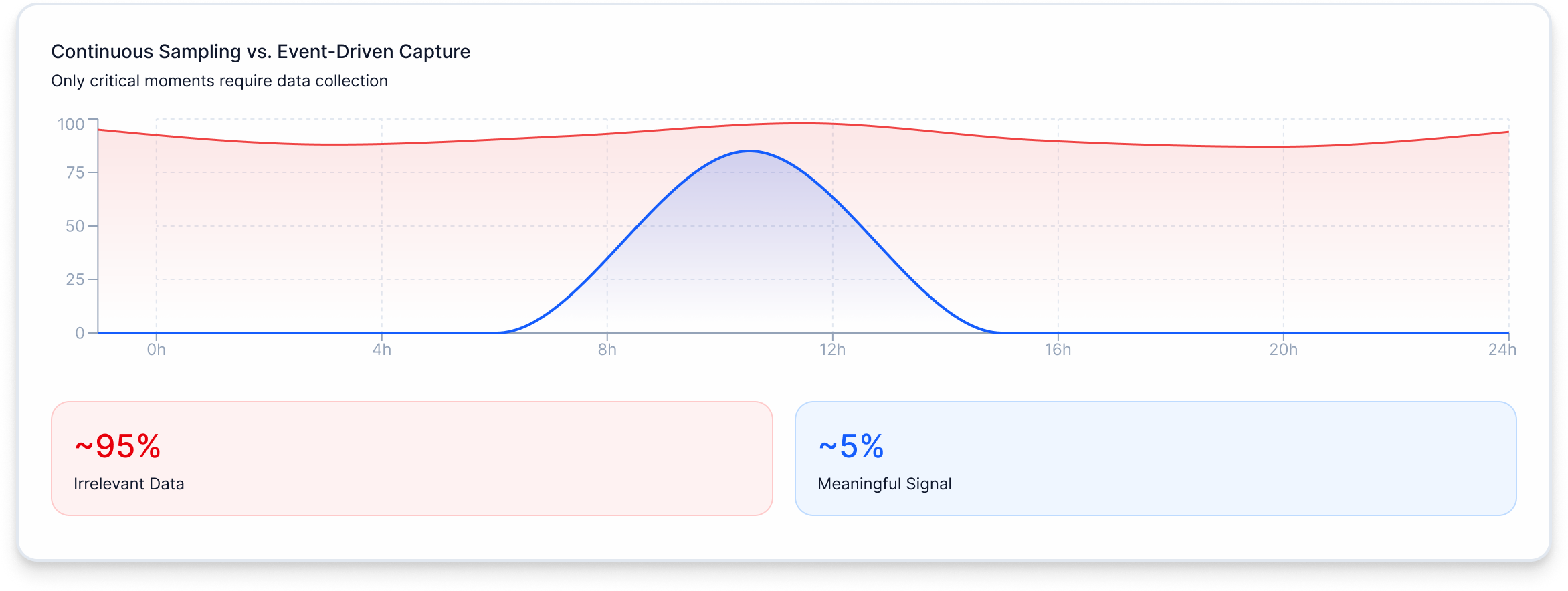

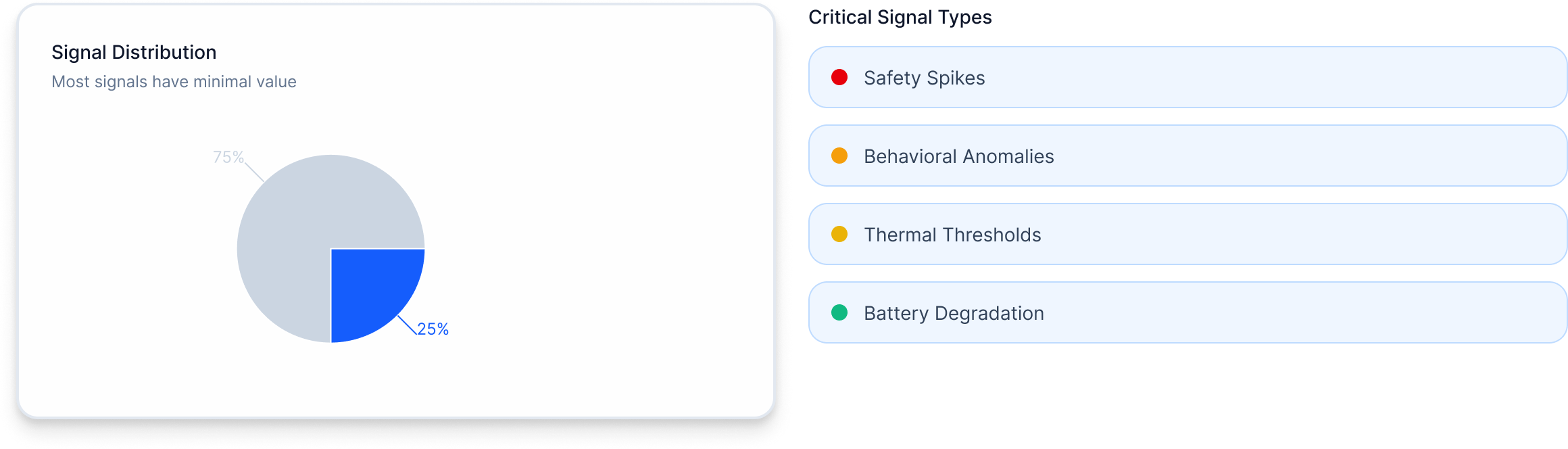

1/ Most telemetry is noise unless captured at the right moment

Continuous sampling collected vast amounts of data even when nothing meaningful was happening, a pattern that rarely aligned with real operational needs.

2/ Teams only needed a small subset of signals

Events like safety spikes, behavioral anomalies, thermal thresholds, or battery degradation mattered far more than routine readings.

3/ Processing closer to the source solved multiple problems at once

Evaluating conditions directly on the device reduced cost, improved responsiveness, and removed dependence on constant connectivity.

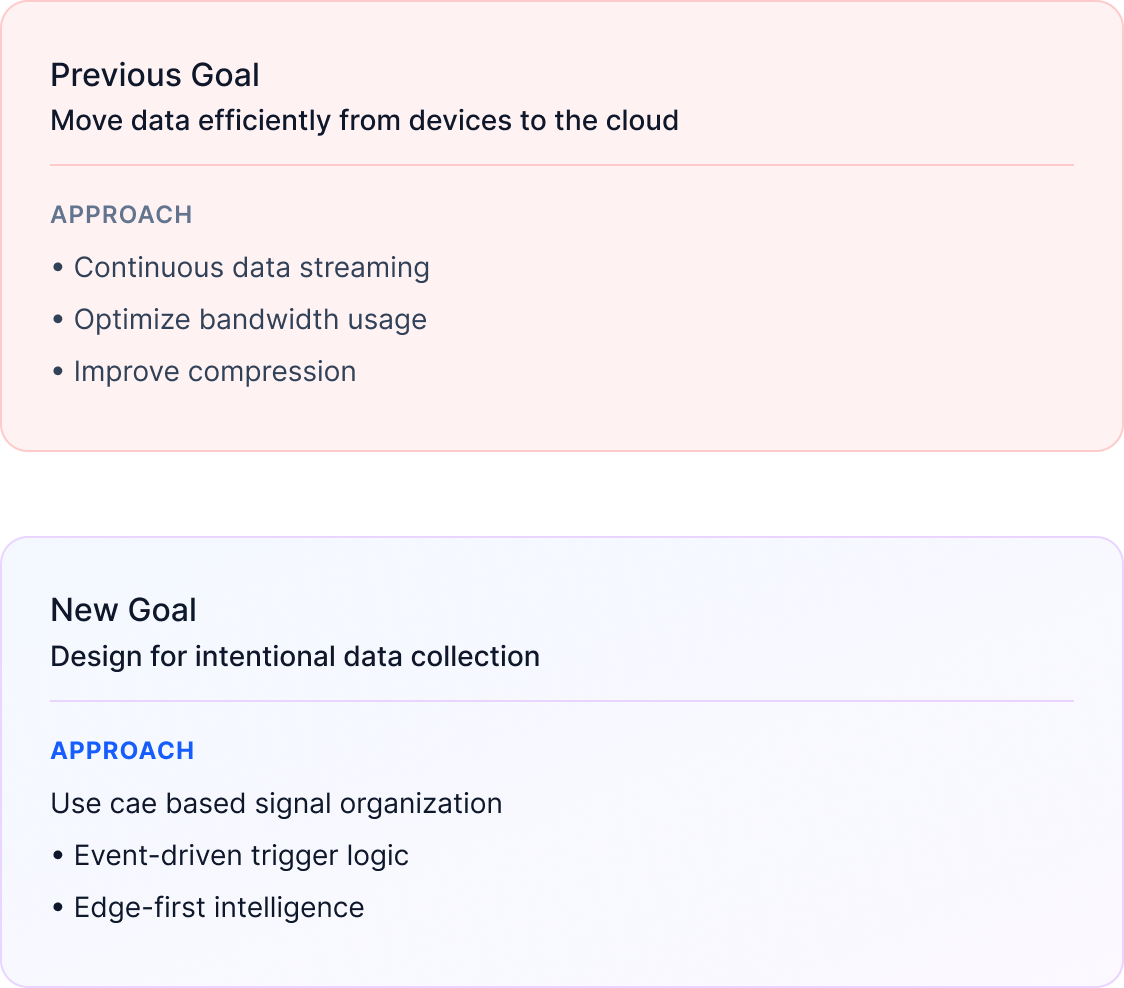

These insights reframed the challenge entirely. The goal was no longer to move data efficiently, but to design for intentional data collection.

The pivot

I proposed a radical shift in the user's mental model: The Edge-First Approach.

Instead of treating the vehicle as a passive pipe that dumps data into the cloud, what if we treated the vehicle as an intelligent filter?

I designed the Data Campaign Builder, focusing on three narrative pillars:

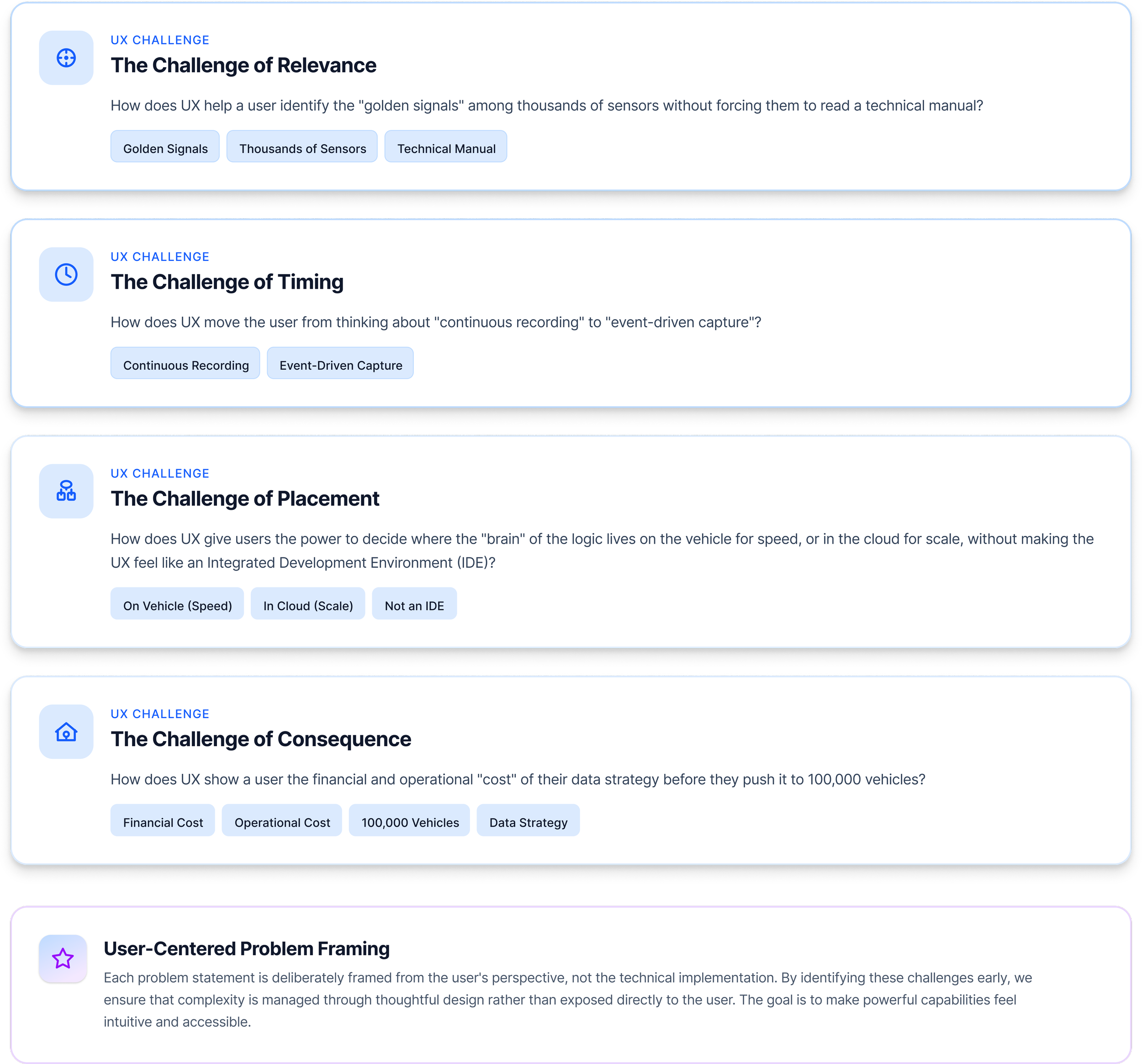

The Design Crux

This was never just a data-pipelining problem; it was a mental model problem. I realized that the interface had to act as a translator between three very different languages: the high-stakes precision of an Engineer, the tactical urgency of an Operator, and the fiscal scrutiny of a Business Stakeholder.

The Design Approach

I designed a guided configuration experience that put users in control of data volume, timing, and context, while abstracting away unnecessary system complexity. This approach improved both system performance and the economic viability of large-scale deployments.

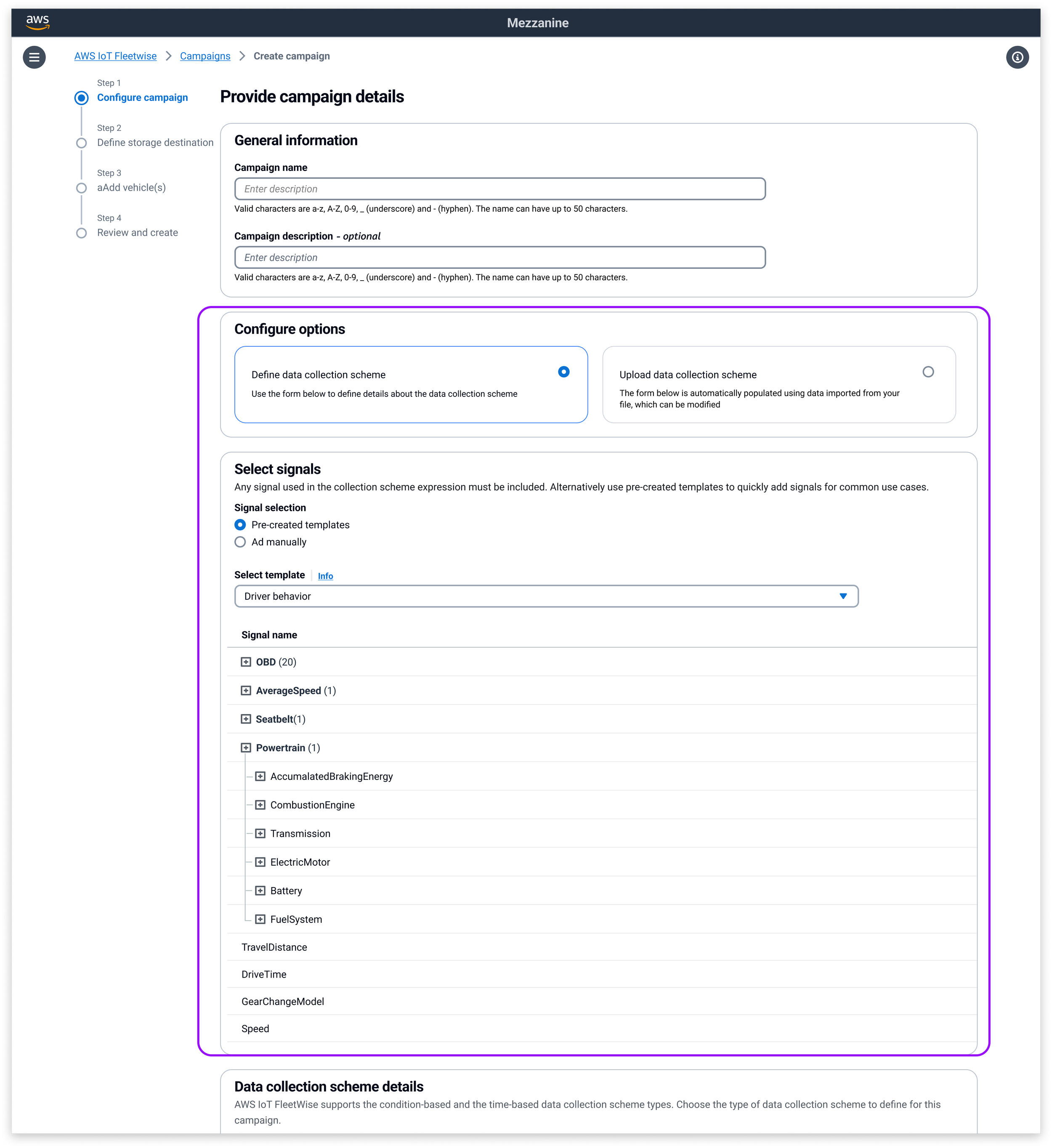

1/ Design Strategy: Use-case-based signal organization

The design intentionally explored two experience directions based on user needs.

For technical users such as vehicle and systems engineers, the experience supported direct access to detailed, raw signals for deep analysis and troubleshooting.

For non-technical users who rely on data to make fast business decisions, signals were organized around real operational use cases such as safety, driver behavior, diagnostics, drivetrain performance, and battery or thermal health.

This approach prevented users from being overwhelmed by thousands of low-level signals, while still ensuring that each persona could quickly find relevant data without requiring deep domain expertise.
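To make the organizing idea concrete, here is a minimal Python sketch of a use-case catalog in which each raw signal is tagged with the operational use cases it supports. Non-technical users browse the grouped view, while engineers can still list every raw signal. The signal names, use-case labels, and the `Signal` and `SignalCatalog` classes are hypothetical illustrations, not FleetWise's actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Signal:
    # Hypothetical raw signal: a fully qualified name plus the use cases it serves.
    name: str
    use_cases: frozenset[str]


@dataclass
class SignalCatalog:
    signals: list[Signal] = field(default_factory=list)

    def add(self, name: str, *use_cases: str) -> None:
        self.signals.append(Signal(name, frozenset(use_cases)))

    def by_use_case(self) -> dict[str, list[str]]:
        """Group signal names under each use case (the non-technical view)."""
        groups: dict[str, list[str]] = defaultdict(list)
        for sig in self.signals:
            for uc in sorted(sig.use_cases):
                groups[uc].append(sig.name)
        return dict(groups)

    def raw_names(self) -> list[str]:
        """Flat, sorted list of every raw signal (the engineering view)."""
        return sorted(sig.name for sig in self.signals)


# Hypothetical signals and use-case labels, for illustration only.
catalog = SignalCatalog()
catalog.add("Vehicle.Speed", "safety", "driver_behavior")
catalog.add("Vehicle.Brake.PedalPosition", "safety", "driver_behavior")
catalog.add("Battery.CellTemperature.Max", "battery_thermal")
catalog.add("Powertrain.Motor.Torque", "drivetrain")

print(catalog.by_use_case()["safety"])
# ['Vehicle.Speed', 'Vehicle.Brake.PedalPosition']
```

Tagging signals rather than nesting them in a fixed tree lets one signal, such as speed, appear under several use cases without being duplicated.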

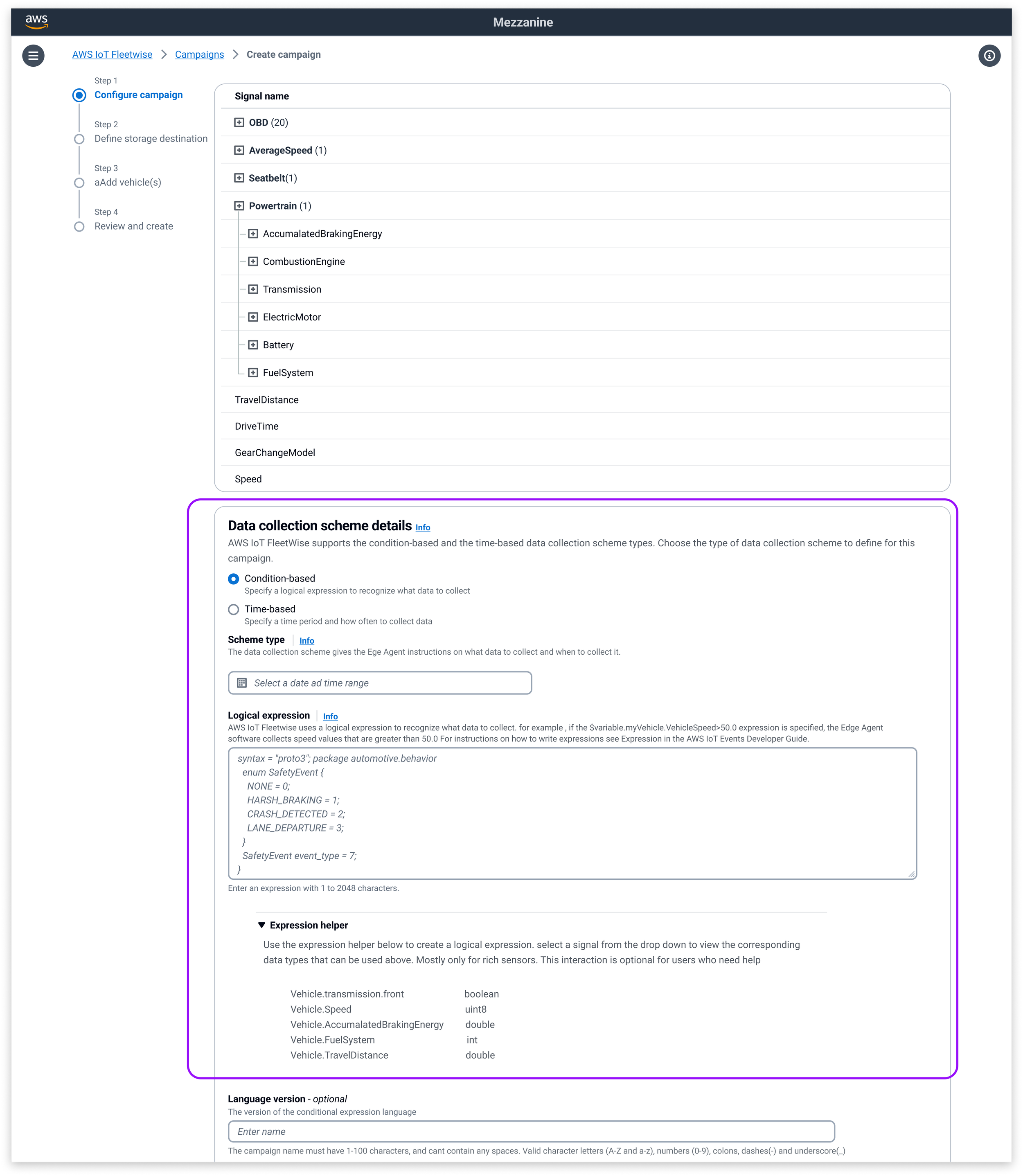

2/ Design Strategy: The "If This, Then Collect" Logic

Instead of collecting everything all the time, the design flipped the model to start with intent.

If a meaningful event occurred, such as hard braking, overheating, rapid acceleration, or a critical threshold crossing, then data was captured.

If nothing noteworthy happened, nothing was collected.

This event-driven approach ensured data collection was purposeful rather than continuous, focusing attention on moments that actually mattered. Users no longer had to sift through streams of background noise; the system surfaced data only when behavior changed, risk increased, or action was required.

Traditional way: Send everything to the cloud, then filter.

My design: Evaluate driver behavior. If speed > 75 mph, then start high-frequency ingestion.

UX impact: This reduced "noise" by 80% while ensuring zero-latency capture of critical events.
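The "If This, Then Collect" rule can be sketched as a filter that runs on the device. This minimal Python illustration assumes a hypothetical `Trigger` with one signal, one comparison operator, and one threshold (in the spirit of Phase 1's single-condition triggers) and reads the speed example as 75 mph. Readings that do not satisfy the rule are dropped at the edge; a real deployment would also raise the sampling rate when the rule fires, which this sketch does not model. It is not the production firmware logic.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator

# Comparison operators a single-condition trigger may use (hypothetical set).
OPS: dict[str, Callable[[float, float], bool]] = {
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "<=": operator.le,
}


@dataclass(frozen=True)
class Trigger:
    """Hypothetical single-condition rule: IF <signal> <op> <threshold> THEN collect."""
    signal: str
    op: str
    threshold: float

    def fires(self, sample: dict[str, float]) -> bool:
        value = sample.get(self.signal)
        # A missing signal never fires, so the rule fails closed (no collection).
        return value is not None and OPS[self.op](value, self.threshold)


def collect(samples: Iterable[dict[str, float]], trigger: Trigger) -> Iterator[dict[str, float]]:
    """Evaluate each sample on the device and forward only the matching ones.

    Samples that do not satisfy the trigger never leave the vehicle.
    """
    for sample in samples:
        if trigger.fires(sample):
            yield sample


overspeed = Trigger(signal="Vehicle.Speed", op=">", threshold=75.0)  # mph
stream = [
    {"Vehicle.Speed": 62.0},
    {"Vehicle.Speed": 78.5},
    {"Vehicle.Speed": 81.0},
    {"Vehicle.Speed": 70.0},
]
uploaded = list(collect(stream, overspeed))
print(len(uploaded), "of", len(stream), "samples uploaded")
# 2 of 4 samples uploaded
```

Keeping the rule to a single comparison makes its behavior easy to predict and debug, which is the same reasoning behind deferring multi-condition rules.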

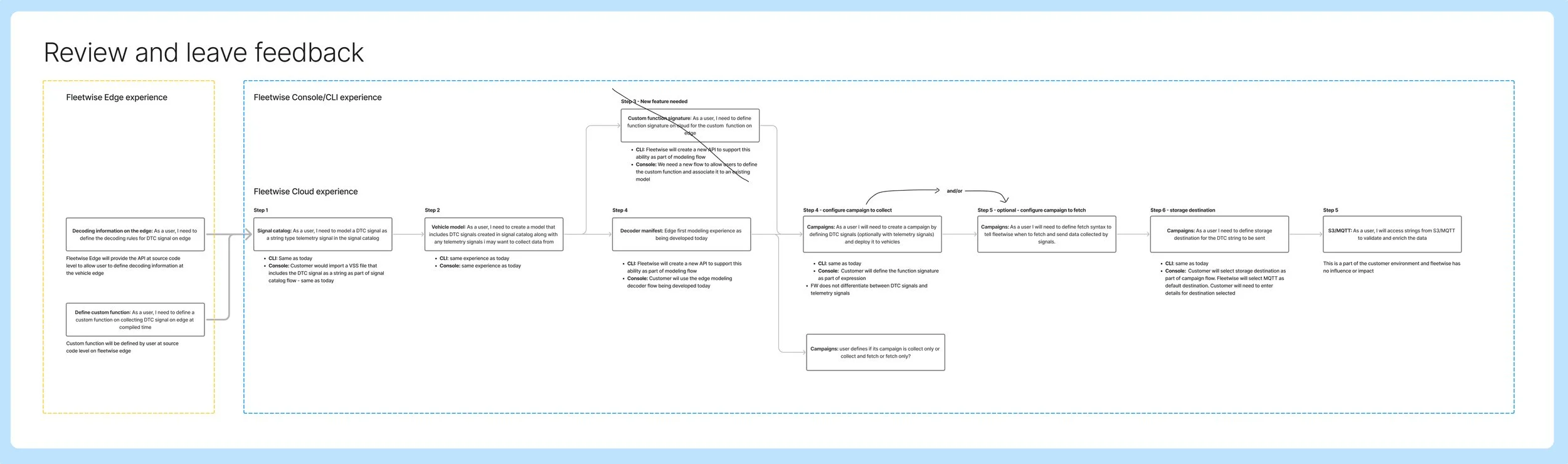

Aligning Teams

Shifting from cloud-first ingestion to selective, edge-aware collection required alignment across firmware engineers, cloud architects, product managers, and customer-facing teams.

Using workflow diagrams and decision trees, I helped align teams around a simple principle:

Collect only what matters, and collect it as close to the source as possible.

This framing gave engineering clarity on feasibility, helped product prioritize early capabilities, and enabled go-to-market teams to clearly explain the value to customers.

North Star vs. Phase 1

North Star vision: The 3D digital twin and seamless ecosystem

Where the system ultimately needed to go

The long-term goal was to evolve FleetWise into a fully intelligent rule engine capable of adapting to real-world complexity. In this future state, the system would support:

Multi-condition logic, allowing users to define rich rules across multiple signals

Adaptive sampling, where data collection responds dynamically to context

Templates for common scenarios, such as safety monitoring or diagnostics

Intelligent recommendations, helping users choose the right signals and thresholds

Hybrid edge and cloud evaluation, balancing immediacy with historical context

This vision set the direction for how data collection could become both smarter and more autonomous over time.
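Multi-condition logic could extend the single trigger into a small rule tree. The Python sketch below is a minimal illustration of that idea, assuming hypothetical `Condition`, `AllOf`, and `AnyOf` node types and made-up thresholds; it describes a possible North Star direction, not anything that shipped.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Union

OPS: dict[str, Callable[[float, float], bool]] = {
    ">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le,
}


@dataclass(frozen=True)
class Condition:
    """Leaf node: one signal compared against one threshold (hypothetical)."""
    signal: str
    op: str
    threshold: float

    def evaluate(self, sample: dict[str, float]) -> bool:
        value = sample.get(self.signal)
        return value is not None and OPS[self.op](value, self.threshold)


@dataclass(frozen=True)
class AllOf:
    """Fires only when every child rule fires (logical AND)."""
    children: tuple["Rule", ...]

    def evaluate(self, sample: dict[str, float]) -> bool:
        return all(child.evaluate(sample) for child in self.children)


@dataclass(frozen=True)
class AnyOf:
    """Fires when at least one child rule fires (logical OR)."""
    children: tuple["Rule", ...]

    def evaluate(self, sample: dict[str, float]) -> bool:
        return any(child.evaluate(sample) for child in self.children)


Rule = Union[Condition, AllOf, AnyOf]

# Hypothetical rule: hard braking (pedal >= 80%) above 60 mph, OR battery cell over 55 °C.
rule: Rule = AnyOf((
    AllOf((
        Condition("Vehicle.Speed", ">", 60.0),
        Condition("Vehicle.Brake.PedalPosition", ">=", 80.0),
    )),
    Condition("Battery.CellTemperature.Max", ">", 55.0),
))

print(rule.evaluate({"Vehicle.Speed": 70.0, "Vehicle.Brake.PedalPosition": 90.0}))  # True
print(rule.evaluate({"Vehicle.Speed": 70.0, "Vehicle.Brake.PedalPosition": 10.0}))  # False
print(rule.evaluate({"Battery.CellTemperature.Max": 58.0}))  # True
```

Because every node exposes the same `evaluate` method, templates for common scenarios could be stored as prebuilt trees that users adjust rather than author from scratch.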

Phase 1 Release

What we shipped to earn trust first

Rather than attempting everything at once, we intentionally launched a stable, dependable foundation. Phase 1 focused on delivering immediate value while preserving reliability:

Basic signal selection grounded in real operational needs

Simple frequency controls that were easy to understand and predict

Single-condition triggers to ensure consistent and debuggable behavior

Edge-side evaluation for critical events, enabling faster response and lower cost

Clear cost awareness, so teams could understand impact before deploying

This approach allowed customers to succeed early, while creating a clear path toward more advanced capabilities without compromising trust.

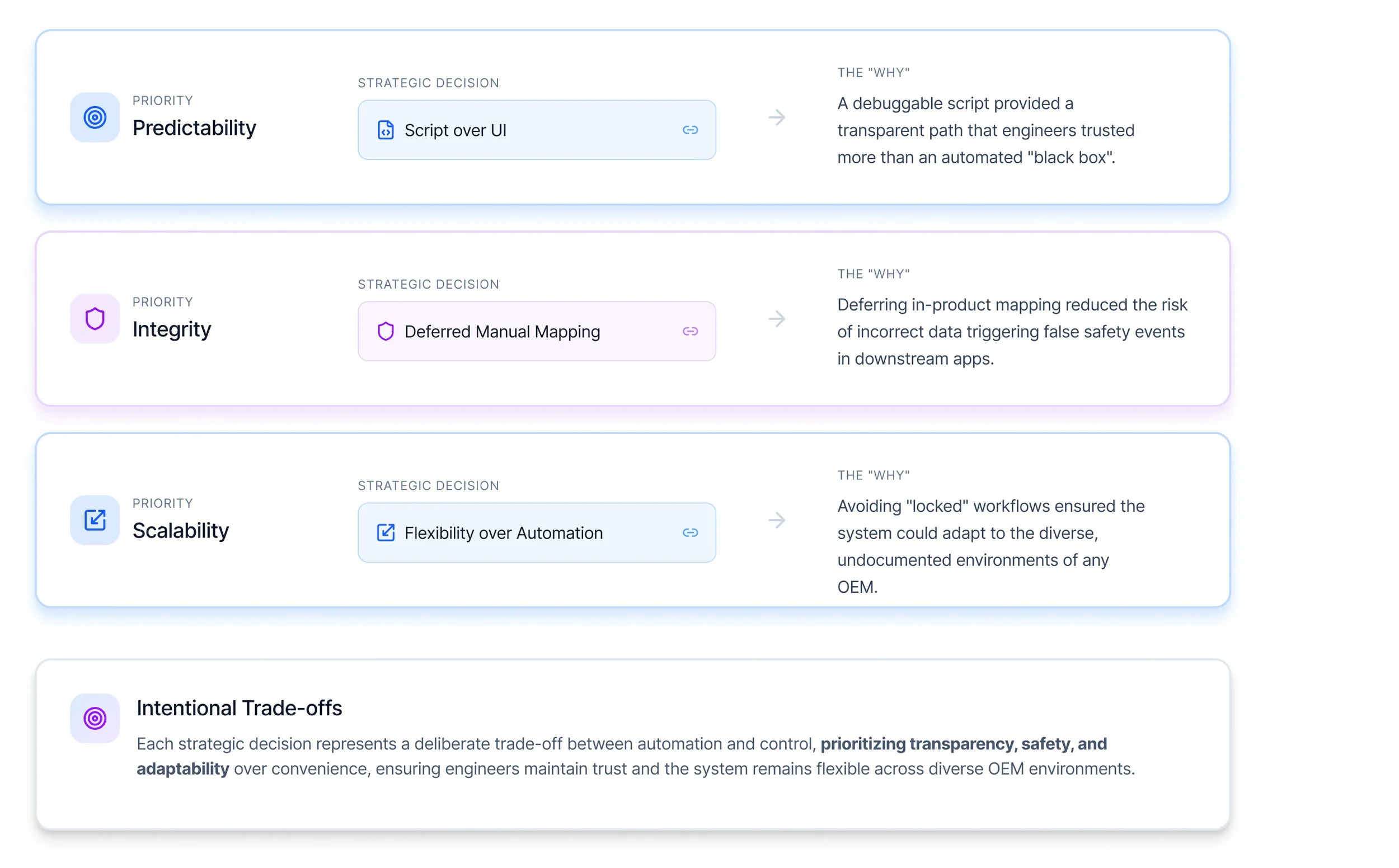

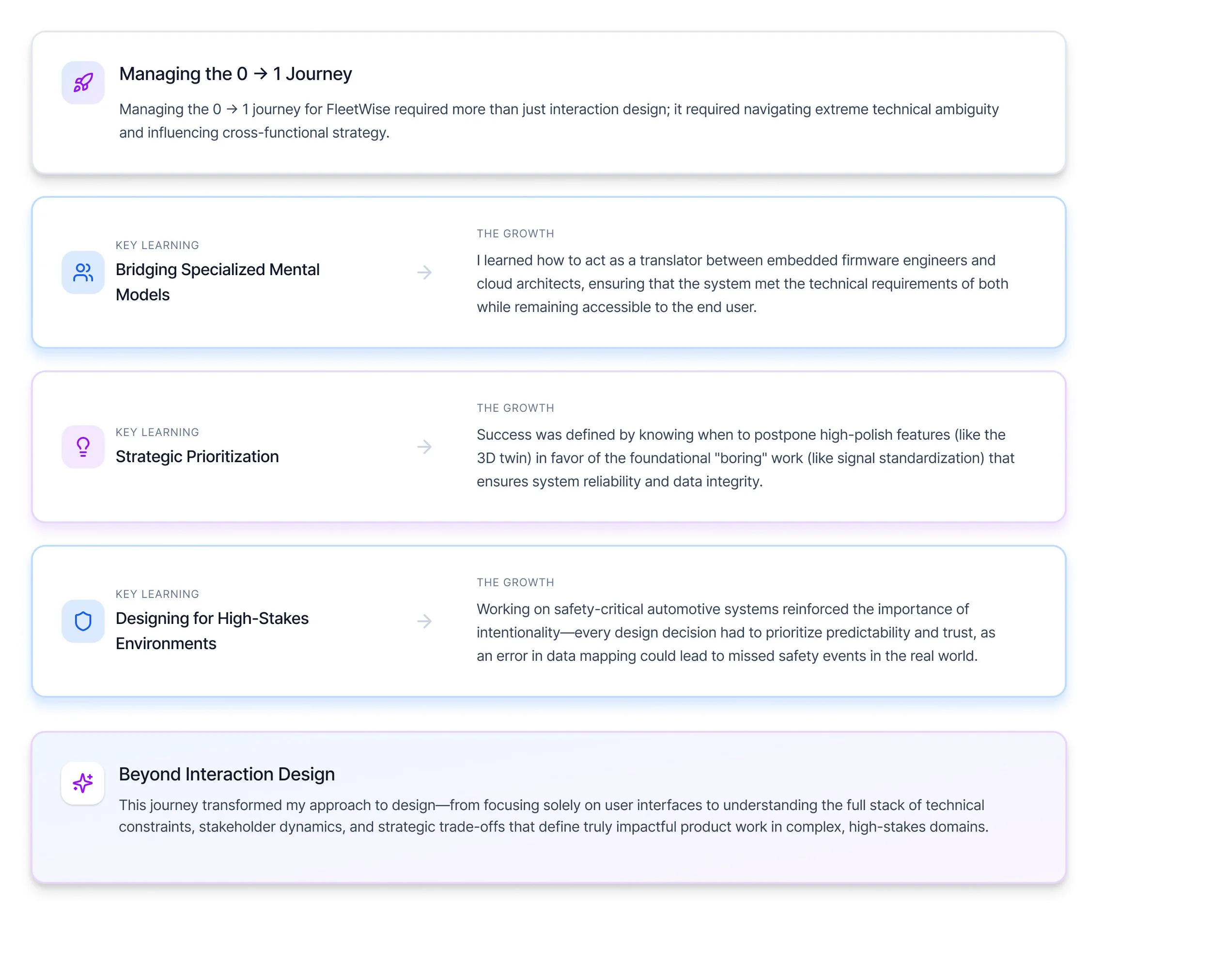

Strategic trade-offs

We deferred multi-condition rules to preserve reliability and predictability. We chose clarity over maximum configurability to avoid overwhelming early users. We proposed reusable templates, but postponed them until sufficient real-world data existed. And we prioritized edge-first logic, even though it required careful cross-team coordination, because it delivered the greatest long-term benefit.